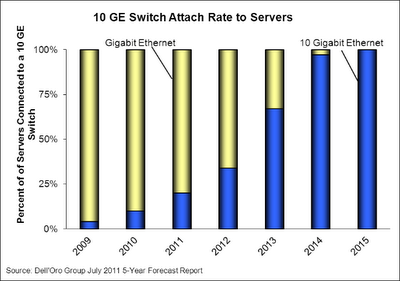

Dell'Oro predicts that the transition to a majority of server deployments using 10 Gigabit Ethernet (10 GE) will occur within the next two years, and that most of the growth in networking sales will be in fixed-configuration 10G switches.

Drivers for the move to 10G networking include:

- Multi-core processors and blade servers greatly increase computational density, creating a corresponding demand for bandwidth.

- Integration of 10G networking on server motherboards (see Intel's 10G 'Romley' server to spur Ethernet switch growth).

- Virtualization and server consolidation ensure that servers are fully utilized, further increasing demand for bandwidth.

- Increase in networked storage, spurred by virtualization, increases demand for bandwidth.

- Rapidly dropping prices of 10G switches, driven by the availability of merchant silicon.

Top of rack switches have been treated as the poor stepchild of networking: deployed as a dumb access layer to the core switches, where all the intelligence and control is applied. This approach works well if most data center traffic flows between the servers and the Internet, referred to as North-South traffic. However, the same growth in converged storage and virtualization that is driving demand for bandwidth and the transition to 10G is also fundamentally altering traffic paths: most of the new traffic is local communication within the data center, referred to as East-West traffic. Examples of East-West traffic include access to local storage, storage replication, virtual machine migration and scale-out clustered applications (like Hadoop).
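To make the North-South / East-West distinction concrete, the sketch below labels a flow East-West when both endpoints fall inside the data center's own address space, and North-South otherwise, then reports what share of bytes stays local. It is a minimal illustration, not a description of any product: the prefixes in `DATA_CENTER_PREFIXES` are hypothetical placeholders, and the function names are invented for this example.

```python
import ipaddress

# Hypothetical address space assumed to belong to the data center.
DATA_CENTER_PREFIXES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_local(addr: str) -> bool:
    """Return True if addr falls inside one of the data center prefixes."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in DATA_CENTER_PREFIXES)


def classify_flow(src: str, dst: str) -> str:
    """Label a flow East-West if both endpoints are local, else North-South."""
    if is_local(src) and is_local(dst):
        return "east-west"
    return "north-south"


def east_west_share(flows):
    """Fraction of total bytes carried by East-West flows.

    flows: iterable of (src, dst, nbytes) tuples.
    """
    total = 0
    east_west = 0
    for src, dst, nbytes in flows:
        total += nbytes
        if classify_flow(src, dst) == "east-west":
            east_west += nbytes
    return east_west / total if total else 0.0
```

For example, a storage replication flow between `10.0.1.5` and `10.0.2.7` is classified as East-West, while a web response from `10.0.1.5` to `203.0.113.9` is North-South. In practice the flow records would come from switch monitoring rather than a hand-built list.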

Image from 802.1aq and TRILL

Most 10G top of rack switches now rely on merchant silicon. However, there are significant differences in which hardware features each vendor exposes and the ease with which these capabilities can be managed in order to create an intelligent edge.

Image from Merchant silicon

Choosing sFlow for performance monitoring provides the scalability to centrally monitor tens of thousands of 10G ports on top of rack switches, as well as their 40 Gigabit uplink ports. sFlow is also available in 100 Gigabit switches, ensuring visibility as higher speed interconnects are deployed to support the growing 10 Gigabit edge.
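Much of sFlow's scalability comes from random 1-in-N packet sampling: a switch exports only a small fraction of packets, and the collector scales the samples back up to estimate totals. The sketch below shows that arithmetic, together with the approximate 95% confidence error bound commonly quoted in sFlow.org sampling guidance (percent error at most 196 × sqrt(1 / samples)). It is a simplified model, not an sFlow agent: sampling is treated as an independent coin flip per packet, and all function names are hypothetical.

```python
import math
import random


def sample_stream(frame_lengths, sampling_rate, rng):
    """Select each frame independently with probability 1/sampling_rate.

    Simplified model of 1-in-N random packet sampling.
    """
    return [n for n in frame_lengths if rng.random() < 1.0 / sampling_rate]


def estimate_totals(sampled_frame_lengths, sampling_rate):
    """Scale sampled frames up by the sampling rate to estimate totals."""
    samples = len(sampled_frame_lengths)
    est_packets = samples * sampling_rate
    est_bytes = sum(sampled_frame_lengths) * sampling_rate
    return est_packets, est_bytes


def percent_error_bound(samples):
    """Approximate 95% confidence bound on relative error, in percent."""
    return 196 * math.sqrt(1.0 / samples)
```

For example, three sampled frames of 1500, 64 and 500 bytes at a sampling rate of 1000 give an estimate of 3,000 packets and 2,064,000 bytes. Accuracy depends on how many samples have been collected, not on link speed, which is why the same approach holds up from 10G edge ports to 40G and 100G uplinks: 10,000 samples bound the error to roughly 2%.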

Finally, the sFlow standard addresses the broader challenge of managing large, converged data centers by integrating network, server, storage and application performance monitoring to provide the comprehensive view of data center performance needed for effective control.