This article describes how to use the Containerlab DDoS testbed to simulate a variety of flood attacks and observe the automated mitigation actions designed to eliminate the attack traffic.

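docker run --rm -it --privileged --network host --pid="host" \
  -v /var/run/docker.sock:/var/run/docker.sock -v /run/netns:/run/netns \
  -v ~/clab:/home/clab -w /home/clab \
  ghcr.io/srl-labs/clab bash

Start Containerlab. The command opens a bash shell in the Containerlab container, with the host's ~/clab directory mounted as the working directory.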

curl -O https://raw.githubusercontent.com/sflow-rt/containerlab/master/ddos.yml

Download the Containerlab topology file.

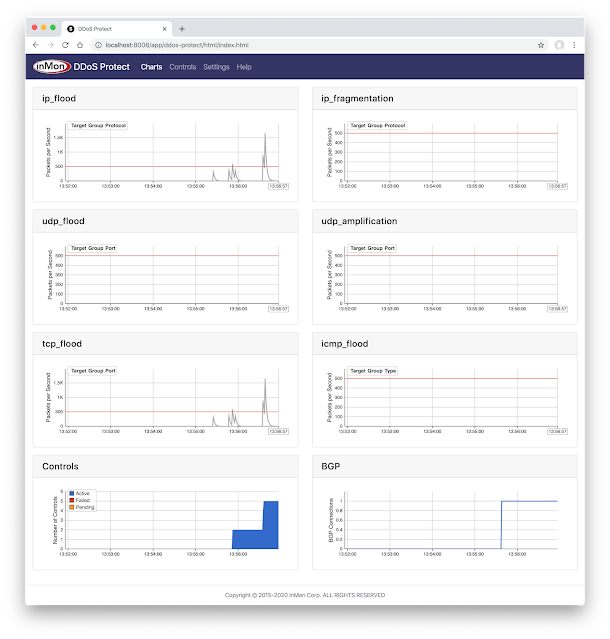

containerlab deploy -t ddos.yml

Deploy the topology and access the DDoS Protect screen at http://localhost:8008/app/ddos-protect/html/.
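The containers can take a few seconds to start after deployment. A minimal sketch, assuming curl is available in the shell used to download the topology file, polls the DDoS Protect page until it responds. The wait_for_url function and its timeout handling are hypothetical helpers for illustration, not part of Containerlab or sFlow-RT.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a URL once per second until it returns an
# HTTP success status, giving up after TIMEOUT seconds (default 60).
wait_for_url() {
  local url="$1" timeout="${2:-60}" elapsed=0
  # -s silences progress output; -f makes HTTP 4xx/5xx responses count as failures
  until curl -sf -o /dev/null --max-time 2 "$url"; do
    if (( elapsed >= timeout )); then
      echo "timed out after ${timeout}s waiting for $url" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 0
}

# Example (not run automatically):
#   wait_for_url http://localhost:8008/app/ddos-protect/html/ 120 \
#     && echo "DDoS Protect is ready"
```

Polling with a bounded timeout keeps a scripted setup from hanging forever if a container fails to start.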

docker exec -it clab-ddos-sp-router vtysh -c "show bgp ipv4 flowspec detail"

At any time, run the command above to see the BGP Flowspec rules installed on the sp-router. Simulate the volumetric attacks using hping3.
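When running several attacks in a row, it can help to summarize the vtysh output instead of reading it in full. A minimal sketch: count_flowspec_entries is a hypothetical helper that counts the lines beginning with "BGP flowspec entry", the header line that starts each entry. The -it flags are dropped in the example loop because no interactive terminal is needed when piping the output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count Flowspec entries in
# "show bgp ipv4 flowspec detail" output read from stdin.
count_flowspec_entries() {
  # grep -c prints 0 but exits non-zero when nothing matches;
  # "|| true" keeps the printed count and returns success.
  grep -c '^BGP flowspec entry' || true
}

# Example (not run automatically): print the number of rules every 2 seconds.
#   while true; do
#     docker exec clab-ddos-sp-router vtysh -c "show bgp ipv4 flowspec detail" \
#       | count_flowspec_entries
#     sleep 2
#   done
```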

Note: The hping3 --rand-source option, which generates packets with random source addresses, would create a more authentic DDoS attack simulation. It is not used in these examples because the victim's responses to the attack packets (ICMP Port Unreachable) would be sent back to the random addresses and could leak out of the Containerlab test network. Instead, varying source and destination ports are used to create entropy in the attacks.

When you are finished trying the examples below, run the following command to stop the containers and free the resources associated with the emulation.

containerlab destroy -t ddos.yml

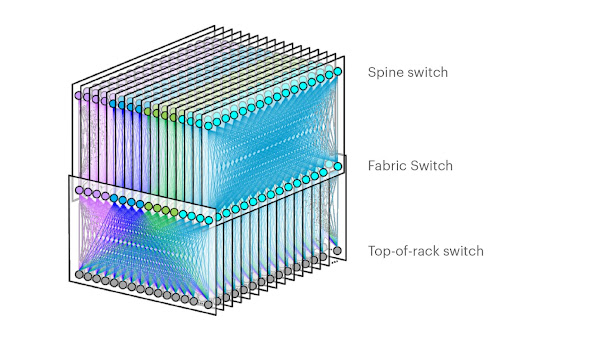

Moving the DDoS mitigation solution from Containerlab to production is straightforward, since sFlow and BGP Flowspec are widely available in routing platforms. The articles Real-time DDoS mitigation using BGP RTBH and FlowSpec; DDoS Mitigation with Cisco, sFlow, and BGP Flowspec; and DDoS Mitigation with Juniper, sFlow, and BGP Flowspec provide configuration examples for Arista, Cisco, and Juniper routers, respectively.

IP Flood

IP packets by target address and protocol. This is a catch-all signature that will catch any volumetric attack against a protected address. Setting a high threshold for the generic flood attack allows the other, more targeted, signatures to trigger first and provide a more nuanced response.
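The signature amounts to grouping traffic by (target address, IP protocol) and comparing each group against a threshold. The following sketch illustrates that idea with awk, assuming a hypothetical input of one "source destination protocol" line per sFlow packet sample collected over a fixed window. Because sFlow samples 1-in-N packets, each group's sample count is multiplied by the sampling rate to estimate the packet count. This is a simplified illustration, not how the DDoS Protect application is implemented.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "src dst proto" sample lines on stdin and print
# "dst proto estimated_packets" for every (dst, proto) group whose estimate
# exceeds THRESHOLD.
#   $1 = sampling rate N (1-in-N packets sampled), $2 = packet threshold
ip_flood_candidates() {
  local sampling_rate="$1" threshold="$2"
  awk -v n="$sampling_rate" -v t="$threshold" '
    { count[$2 " " $3]++ }            # key = target address + IP protocol
    END {
      for (key in count) {
        est = count[key] * n          # scale samples up to estimated packets
        if (est > t) print key, est
      }
    }'
}

# Example: three GRE samples to 192.0.2.129 at 1-in-1000 sampling
#   printf '10.0.0.1 192.0.2.129 47\n10.0.0.2 192.0.2.129 47\n10.0.0.3 192.0.2.129 47\n' \
#     | ip_flood_candidates 1000 2000
#   # prints "192.0.2.129 47 3000"
```

Grouping only on the target address and protocol is what makes this a catch-all: any volumetric attack lands in some group, which is why a high threshold is used.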

docker exec -it clab-ddos-attacker hping3 \
  --flood --rawip -H 47 192.0.2.129

Launch simulated IP flood attack against 192.0.2.129 using IP protocol 47 (GRE).

BGP flowspec entry: (flags 0x498)
  Destination Address 192.0.2.129/32
  IP Protocol = 47
  FS:rate 0.000000
  received for 00:00:12
  not installed in PBR

Resulting Flowspec entry in sp-router. The filter blocks all IP protocol 47 (GRE) traffic to the targeted address 192.0.2.129.

IP Fragmentation

IP fragments by target address and protocol. There should be very few fragmented packets on a well-configured network, and the attack is typically designed to exhaust host resources, so a low threshold can be used to quickly mitigate these attacks.

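docker exec -it clab-ddos-attacker hping3 \
  --flood -f -p ++1024 192.0.2.129

Launch simulated IP fragmentation attack against 192.0.2.129. hping3 sends TCP packets by default, which is why the resulting rule also matches IP Protocol = 6.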

BGP flowspec entry: (flags 0x498)
  Destination Address 192.0.2.129/32
  IP Protocol = 6
  Packet Fragment = 2
  FS:rate 0.000000
  received for 00:00:10
  not installed in PBR

Resulting Flowspec entry in sp-router. The filter blocks fragmented TCP (IP protocol 6) packets to the targeted address 192.0.2.129.
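The Packet Fragment value in the rule is a bitmask. Assuming FRR prints the raw bitmask of the Flowspec fragment component defined in RFC 5575 (DF = 0x01, IsF = 0x02, FF = 0x04, LF = 0x08), a value of 2 means "is a fragment". The hypothetical decode_fragment_bits helper names the bits that are set in such a value.

```bash
#!/usr/bin/env bash
# Hypothetical helper: name the bits set in a Flowspec fragment bitmask.
# Assumed bit values (RFC 5575 fragment component):
#   DF=0x01 (Don't Fragment), IsF=0x02 (Is a Fragment),
#   FF=0x04 (First Fragment), LF=0x08 (Last Fragment)
decode_fragment_bits() {
  local value="$1" i
  local names=(DF IsF FF LF)   # index i corresponds to bit value 2^i
  local set_bits=()
  for i in "${!names[@]}"; do
    if (( value & (1 << i) )); then
      set_bits+=("${names[$i]}")
    fi
  done
  echo "${set_bits[*]}"
}

# Example: decode_fragment_bits 2   # prints "IsF"
```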

ICMP Flood

ICMP packets by target address and type. Examples include Ping Flood and Smurf attacks.

docker exec -it clab-ddos-attacker hping3 \
  --flood --icmp -C 0 192.0.2.129

Launch simulated ICMP flood attack using ICMP type 0 (Echo Reply) packets against 192.0.2.129.

BGP flowspec entry: (flags 0x498)
  Destination Address 192.0.2.129/32
  IP Protocol = 1
  ICMP Type = 0
  FS:rate 0.000000
  received for 00:00:13
  not installed in PBR

Resulting Flowspec entry in sp-router. The filter blocks ICMP type 0 packets to the targeted address 192.0.2.129.
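The ICMP Type field identifies which ICMP message the rule filters. Type 0 (Echo Reply) fits the Smurf pattern, where the victim receives Echo Replies to requests sent in its name, while a Ping Flood sends Echo Requests (type 8) directly. A small lookup can make the rules easier to read; icmp_type_name is a hypothetical helper that only covers a few common types.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a few common ICMP type numbers to their names.
icmp_type_name() {
  case "$1" in
    0)  echo "Echo Reply" ;;
    3)  echo "Destination Unreachable" ;;
    5)  echo "Redirect" ;;
    8)  echo "Echo Request" ;;
    11) echo "Time Exceeded" ;;
    *)  echo "type $1" ;;        # fall back to the raw number
  esac
}

# Example: icmp_type_name 0   # prints "Echo Reply"
```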

UDP Flood

UDP packets by target address and destination port. The UDP flood attack can be designed to overload the targeted service or exhaust resources on middlebox devices. A UDP flood attack can also trigger a flood of ICMP Destination Port Unreachable responses from the targeted host to the (often spoofed) source addresses of the UDP packets.

docker exec -it clab-ddos-attacker hping3 \
  --flood --udp -p 53 192.0.2.129

Launch simulated UDP flood attack against port 53 (DNS) on 192.0.2.129.

BGP flowspec entry: (flags 0x498)
  Destination Address 192.0.2.129/32
  IP Protocol = 17
  Destination Port = 53
  FS:rate 0.000000
  received for 00:00:13
  not installed in PBR

Resulting Flowspec entry in sp-router. The filter blocks UDP packets with destination port 53 to the targeted address 192.0.2.129.
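The numeric match fields in an entry share a "Name = value" layout, so they can be extracted with sed, for example to script a check that the expected rule appeared after launching an attack. A minimal sketch, assuming that layout holds for the fields of interest; flowspec_field is a hypothetical helper. It works whether the entry is on one line or spread over several, since sed processes each line independently.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the numeric value of a "Name = value" field
# from Flowspec entry text.
#   $1 = entry text, $2 = field name such as "Destination Port"
# The field name is used inside a sed regex, so it should not contain
# regex metacharacters.
flowspec_field() {
  printf '%s\n' "$1" | sed -n "s/.*$2 = \([0-9][0-9]*\).*/\1/p"
}

# Example check (not run automatically):
#   entry=$(docker exec clab-ddos-sp-router vtysh -c "show bgp ipv4 flowspec detail")
#   [ "$(flowspec_field "$entry" "Destination Port")" = 53 ] && echo "DNS rule present"
```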

UDP Amplification

UDP packets by target address and source port. Examples include DNS, NTP, SSDP, SNMP, Memcached, and CharGen reflection attacks.
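Reflection attacks are recognizable by the well-known source port of the abused service. A hypothetical lookup from UDP source port to the amplifiers listed above, using their standard port numbers, might look like this:

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a UDP source port to a commonly abused
# reflection/amplification service (standard well-known ports).
amplifier_service() {
  case "$1" in
    19)    echo "CharGen" ;;
    53)    echo "DNS" ;;
    123)   echo "NTP" ;;
    161)   echo "SNMP" ;;
    1900)  echo "SSDP" ;;
    11211) echo "Memcached" ;;
    *)     echo "unknown" ;;
  esac
}

# Example: label each candidate source port
#   for port in 19 53 123 161 1900 11211; do
#     printf '%5s %s\n' "$port" "$(amplifier_service "$port")"
#   done
```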

docker exec -it clab-ddos-attacker hping3 \
  --flood --udp -k -s 53 -p ++1024 192.0.2.129

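Launch simulated UDP amplification attack using port 53 (DNS) as an amplifier to target 192.0.2.129. The -k option keeps the source port fixed at 53, while -p ++1024 increments the destination port for each packet sent.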

BGP flowspec entry: (flags 0x498)
  Destination Address 192.0.2.129/32
  IP Protocol = 17
  Source Port = 53
  FS:rate 0.000000
  received for 00:00:43
  not installed in PBR

Resulting Flowspec entry in sp-router. The filter blocks UDP packets with source port 53 to the targeted address 192.0.2.129.

TCP Flood

TCP packets by target address and destination port. A TCP flood attack can also trigger a flood of TCP responses (RST or SYN-ACK packets) from the targeted host to the (often spoofed) source addresses of the TCP packets.

This signature does not look at TCP flags, for example to identify SYN flood attacks, since Flowspec filters are stateless and filtering all packets with the SYN flag set would effectively block all connections to the target host. However, this control can help mitigate large-volume TCP flood attacks (through the use of a limit or redirect Flowspec action) so that the traffic doesn't overwhelm layer 4 mitigation, such as SYN cookies, running on load balancers or hosts.
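The difference between blocking and limiting shows up in the FS:rate value of each entry. The Flowspec traffic-rate action is expressed in bytes per second, and a rate of 0 means discard, which is why the sample rules above show FS:rate 0.000000; a limit action would carry a non-zero rate instead. A minimal sketch with a hypothetical helper that classifies the action from that text (it does not handle redirect or other actions):

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify the traffic-rate action in Flowspec entry text.
#   "FS:rate 0.000000"    -> drop (a traffic-rate of 0 discards matching traffic)
#   "FS:rate 1000.000000" -> rate-limit 1000.000000 bytes/s
flowspec_action() {
  local rate
  rate=$(printf '%s\n' "$1" | sed -n 's/.*FS:rate \([0-9.]*\).*/\1/p')
  if [ -z "$rate" ]; then
    echo "unknown"
  # awk handles the floating-point comparison that plain shell tests cannot
  elif awk -v r="$rate" 'BEGIN { exit !(r + 0 == 0) }'; then
    echo "drop"
  else
    echo "rate-limit ${rate} bytes/s"
  fi
}

# Example: flowspec_action 'FS:rate 0.000000'   # prints "drop"
```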

docker exec -it clab-ddos-attacker hping3 \
  --flood -p 80 192.0.2.129

Launch simulated TCP flood attack against port 80 (HTTP) on 192.0.2.129.

BGP flowspec entry: (flags 0x498)
  Destination Address 192.0.2.129/32
  IP Protocol = 6
  Destination Port = 80
  FS:rate 0.000000
  received for 00:00:17
  not installed in PBR

Resulting Flowspec entry in sp-router. The filter blocks TCP packets with destination port 80 to the targeted address 192.0.2.129.

TCP Amplification

TCP SYN-ACK packets by target address and source port. In this case, filtering on the TCP flags is very useful: it blocks the reflection attack while still allowing connections to the target host. Recent examples target vulnerable middlebox devices to amplify the TCP reflection attack.

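docker exec -it clab-ddos-attacker hping3 \
  --flood -k -s 80 -p ++1024 -SA 192.0.2.129

Launch simulated TCP amplification attack using port 80 (HTTP) as an amplifier to target 192.0.2.129. The -S and -A options set the SYN and ACK flags, mimicking the SYN-ACK responses sent by a reflector.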

BGP flowspec entry: (flags 0x498)
  Destination Address 192.0.2.129/32
  IP Protocol = 6
  Source Port = 80
  TCP Flags = 18
  FS:rate 0.000000
  received for 00:00:11
  not installed in PBR

Resulting Flowspec entry in sp-router. The filter blocks TCP packets with source port 80 and the SYN and ACK flags set (TCP Flags = 18) to the targeted address 192.0.2.129.
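TCP Flags = 18 is a bitmask over the TCP header flag bits (FIN = 1, SYN = 2, RST = 4, PSH = 8, ACK = 16, URG = 32, ECE = 64, CWR = 128), so 18 = 2 + 16 selects SYN and ACK, the flags carried by a reflected connection response. A hypothetical helper to decode such values:

```bash
#!/usr/bin/env bash
# Hypothetical helper: name the TCP flags set in a numeric flags value.
decode_tcp_flags() {
  local value="$1" i
  # Index i corresponds to bit value 2^i in the TCP header flags byte
  local names=(FIN SYN RST PSH ACK URG ECE CWR)
  local set_flags=()
  for i in "${!names[@]}"; do
    if (( value & (1 << i) )); then
      set_flags+=("${names[$i]}")
    fi
  done
  echo "${set_flags[*]}"
}

# Example: decode_tcp_flags 18   # prints "SYN ACK"
```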