Protectli Vault - 4 Port

The VyOS 1.4.0 (Sagitta) LTS release announcement is exciting news! VyOS is an open source router operating system based on Linux that can be installed on commodity PC hardware. At least 1 GB of RAM and 4 GB of storage are recommended for optimal performance.

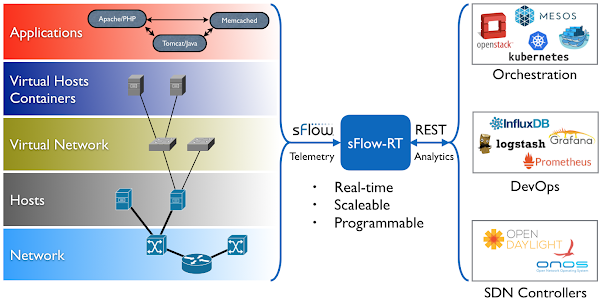

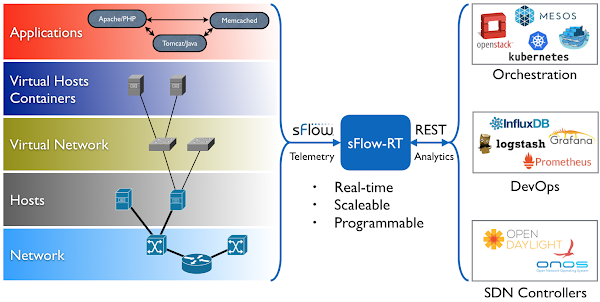

The new 1.4 LTS release includes a significantly enhanced implementation of industry standard sFlow telemetry based on the open source Host sFlow agent.

    set system sflow interface eth0
    set system sflow interface eth1
    set system sflow interface eth2
    set system sflow interface eth3
    set system sflow polling 30
    set system sflow sampling-rate 1000
    set system sflow drop-monitor-limit 50
    set system sflow server 192.0.2.100

Enter the commands above to enable sFlow monitoring on interfaces eth0, eth1, eth2, and eth3. Interface counters will be exported every 30 seconds, packets will be sampled with probability 1/1000, and up to 50 packet headers (and drop reasons) per second will be collected from packets dropped by the router. The sFlow telemetry stream will be sent to an sFlow collector at 192.0.2.100.
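If the router has a different set of interfaces or a different collector address, the same commands can be generated rather than typed by hand. The following is a minimal sketch, not part of VyOS: `gen_sflow_config` is a hypothetical helper name, and the argument order is an assumption chosen for illustration. It only prints the `set system sflow` commands shown above.

```shell
#!/bin/sh
# Hypothetical helper (not a VyOS tool): print "set system sflow" commands.
# Usage: gen_sflow_config COLLECTOR SAMPLING_RATE POLLING DROP_LIMIT IFACE...
gen_sflow_config() {
  collector=$1
  rate=$2
  polling=$3
  drop_limit=$4
  shift 4
  # One "interface" statement per remaining argument
  for ifname in "$@"; do
    echo "set system sflow interface $ifname"
  done
  echo "set system sflow polling $polling"
  echo "set system sflow sampling-rate $rate"
  echo "set system sflow drop-monitor-limit $drop_limit"
  echo "set system sflow server $collector"
}

# Reproduce the settings used in this article
gen_sflow_config 192.0.2.100 1000 30 50 eth0 eth1 eth2 eth3
```

The output can be pasted into a VyOS session after entering configuration mode with `configure`. As with any VyOS configuration change, the settings take effect on `commit` and persist across reboots after `save`.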

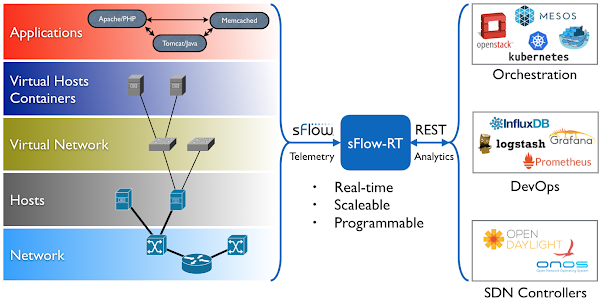

Running Docker on the sFlow collector makes it easy to run a variety of sFlow analytics tools.

    docker run --rm -p 6343:6343/udp sflow/sflowtool

Run the sflow/sflowtool image to decode and print the contents of the sFlow telemetry stream and verify receipt of data.
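For a quick summary instead of the full decode, the records can be counted by type and agent. The sketch below assumes sflowtool's line-by-line output mode (the `-l` option), in which each line starts with `FLOW` or `CNTR` followed by the agent address as comma-separated fields. `count_records` is a hypothetical helper name, and the field layout should be checked against the output of your sflowtool version.

```shell
#!/bin/sh
# Hypothetical helper: summarize sflowtool line-mode output read from stdin.
# Assumes comma-separated lines whose first field is FLOW or CNTR and whose
# second field is the agent address; any other lines are ignored.
count_records() {
  awk -F, '
    $1 == "FLOW" || $1 == "CNTR" { n[$1 " " $2]++ }
    END { for (k in n) print k, n[k] }
  ' | sort
}
```

One way to use it is to take a fixed number of records and then summarize them, for example `docker run --rm -p 6343:6343/udp sflow/sflowtool -l | head -n 200 | count_records`. Seeing both `CNTR` and `FLOW` lines for the router's agent address suggests that counter polling and packet sampling are both arriving at the collector.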

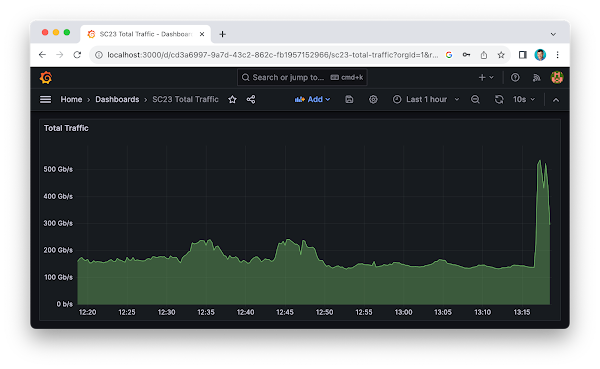

    docker run --rm -p 6343:6343/udp sflow/tcpdump tcp port 80

Run the sflow/tcpdump image to decode and filter sampled packet headers. For more complex packet analysis tasks, try the sflow/tshark image. Run the sflow/sflowtrend image to trend interface counters and top flows.

Deploy real-time network dashboards using Docker compose describes how to configure Prometheus and Grafana to capture time series data and create custom dashboards.

Dropped packet reason codes in VyOS describes how the new Linux kernel in VyOS 1.4 provides detailed visibility into every dropped packet (including the reason it was dropped). This capability is used by the new sFlow agent to implement the sFlow Dropped Packet Notification Structures extension, which provides network-wide visibility into dropped packets.

Download VyOS today to try out the new features. Pre-built LTS images are available with paid support, but anyone can build an image from sources or download the latest rolling release.